What Is AI Reasoning?

Artificial intelligence reasoning is the process by which machines simulate logical thought to draw conclusions, solve problems, or make decisions. While many AI systems rely on statistical pattern recognition, reasoning aims to go further by applying structured logic. This article outlines the technical foundations of AI reasoning, its main types, common approaches, and real-world applications in machine learning and knowledge systems.

What Does AI Reasoning Mean Technically?

In technical terms, AI reasoning refers to the use of logic-based systems, rule-based inference engines, or structured representations to deduce new facts or decisions from existing data or knowledge.

It differs from pattern-based AI systems like deep neural networks in that reasoning systems often involve:

- Symbolic representations (e.g., ontologies, knowledge graphs)

- Formal logic (e.g., propositional logic, predicate logic)

- Inference mechanisms (e.g., modus ponens, resolution, abduction)

- Rules and constraints

These systems attempt to simulate cognitive processes such as deduction (deriving conclusions from rules and facts), induction (inferring general rules from examples), and abduction (inferring the most likely explanation for an observation).
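Resolution, one of the inference mechanisms listed above, can be illustrated with a minimal propositional prover in Python. This is a sketch with simplifying assumptions. Clauses are sets of string literals, and a leading `~` marks negation. The helper names (`negate`, `resolve`, `entails`) are hypothetical. The prover shows that a knowledge base entails a query by adding the query's negation and searching for the empty clause, which signals a contradiction.

```python
from itertools import combinations


def negate(literal: str) -> str:
    """Return the complementary literal ("p" <-> "~p")."""
    return literal[1:] if literal.startswith("~") else "~" + literal


def resolve(c1: frozenset, c2: frozenset) -> list:
    """Return every resolvent of two clauses, one per complementary literal pair."""
    resolvents = []
    for literal in c1:
        if negate(literal) in c2:
            resolvents.append((c1 - {literal}) | (c2 - {negate(literal)}))
    return resolvents


def entails(kb: list, query: str) -> bool:
    """Refutation proof: KB entails query iff KB plus (not query) derives the empty clause."""
    clauses = set(kb) | {frozenset({negate(query)})}
    while True:
        new = set()
        for c1, c2 in combinations(clauses, 2):
            for resolvent in resolve(c1, c2):
                if not resolvent:  # empty clause: contradiction found
                    return True
                new.add(resolvent)
        if new <= clauses:  # nothing new can be derived
            return False
        clauses |= new


# "If something is a bird, it flies" as the clause (~bird OR flies), plus the fact bird.
kb = [frozenset({"~bird", "flies"}), frozenset({"bird"})]
print(entails(kb, "flies"))  # True
print(entails(kb, "swims"))  # False
```

On every pass, this naive loop resolves every pair of clauses. That makes it practical only for very small knowledge bases.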

Types of AI Reasoning

Deductive Reasoning

Deductive reasoning applies general rules to specific situations to reach conclusions. For example, given the rule:

    All birds can fly.

And the fact:

    A penguin is a bird.

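A purely deductive system would therefore conclude that a penguin can fly. In classical logic, simply adding an exception rule (e.g., penguins cannot fly) would produce a contradiction. Blocking the conclusion cleanly requires a way for exceptions to override general rules, which is the role of default or non-monotonic reasoning. Deductive inference is the basis of logic programming and expert systems.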
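The penguin problem can be sketched in Python as follows. The knowledge base, the entity names, and the function names are hypothetical. The sketch contrasts a plain deductive rule, which applies to every bird, with a default rule that an exception can block.

```python
# Hypothetical knowledge base of (predicate, entity) facts.
FACTS = {("bird", "tweety"), ("bird", "pingu"), ("penguin", "pingu")}


def can_fly_deductive(entity: str, facts: set) -> bool:
    """Modus ponens on bird(X) -> can_fly(X), with no exceptions."""
    return ("bird", entity) in facts


def can_fly_default(entity: str, facts: set) -> bool:
    """Default rule: a bird flies unless it is known to be a penguin."""
    return ("bird", entity) in facts and ("penguin", entity) not in facts


for name in ("tweety", "pingu"):
    print(name, can_fly_deductive(name, FACTS), can_fly_default(name, FACTS))
# tweety True True
# pingu True False
```

The default version is non-monotonic. Adding the fact penguin(tweety) would withdraw a conclusion that had previously been drawn, which classical deduction never does.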

Inductive Reasoning

Inductive reasoning generalizes from specific examples. For example, if every swan a system has observed is white, it might infer that all swans are white. Machine learning relies on the same idea: models infer general rules or patterns from training data.

Inductive conclusions are not guaranteed to be correct (a single black swan refutes the rule above), so they are best treated as probabilistic. Inductive methods are central to supervised learning.
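Here is a minimal sketch of inductive generalization. It assumes observations arrive as (species, color) pairs; the function name `induce_color_rules` and the data are hypothetical. A rule is proposed only when every observation of a species agrees, and a single counterexample withdraws it.

```python
from collections import defaultdict


def induce_color_rules(observations):
    """Propose 'all <species> are <color>' when every observation agrees.

    Returns {species: (color, number_of_supporting_observations)}.
    """
    colors = defaultdict(list)
    for species, color in observations:
        colors[species].append(color)
    rules = {}
    for species, seen in colors.items():
        if len(set(seen)) == 1:  # no counterexample observed so far
            rules[species] = (seen[0], len(seen))
    return rules


swans = [("swan", "white")] * 5
print(induce_color_rules(swans))  # {'swan': ('white', 5)}

swans.append(("swan", "black"))  # one counterexample
print(induce_color_rules(swans))  # {}
```

The support count is only a crude measure of confidence. More observations make the rule more plausible, but they never prove it.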

Abductive Reasoning

Abductive reasoning infers the most likely explanation for an observation. In medical AI, for instance, if a patient has symptoms A, B, and C, the system might infer that diagnosis D is the most plausible cause. The conclusion is plausible rather than guaranteed, so abduction is useful when knowledge is uncertain or incomplete.
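Abduction can be sketched as choosing the hypothesis that best explains the observations. In this minimal Python example, the diagnoses E and F, the symptom G, and the scoring scheme are all hypothetical. The scheme prefers hypotheses that explain as many observed symptoms as possible, then those that predict fewer unobserved ones. Real diagnostic systems typically use probabilistic models instead.

```python
# Hypothetical causal knowledge: the symptoms each diagnosis would explain.
EXPLAINS = {
    "D": {"A", "B", "C"},
    "E": {"A", "B"},
    "F": {"C", "G"},
}


def best_explanation(observed: set, explains: dict) -> str:
    """Return the hypothesis covering the most observed symptoms.

    Ties are broken by preferring hypotheses that predict fewer
    symptoms that were not observed.
    """
    def score(hypothesis):
        predicted = explains[hypothesis]
        return (len(predicted & observed), -len(predicted - observed))

    return max(explains, key=score)


print(best_explanation({"A", "B", "C"}, EXPLAINS))  # D
print(best_explanation({"C", "G"}, EXPLAINS))  # F
```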

Reasoning Approaches in AI

Rule-Based Systems

Early AI systems relied on explicit rules written by domain experts. These rules use “if-then” logic and formed the basis of expert systems such as MYCIN and DENDRAL. Rule-based systems use either forward chaining (data-driven) or backward chaining (goal-driven) inference.

Forward chaining:

- Start from facts

- Apply rules to infer new facts

- Repeat until the goal is derived or no new facts can be inferred
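The forward-chaining steps above can be sketched in Python. The sketch assumes rules are (premises, conclusion) pairs of plain strings; the frog/canary rule base is a hypothetical toy example.

```python
# Hypothetical rule base: each rule is (set of premises, conclusion).
RULES = [
    ({"croaks", "eats_flies"}, "frog"),
    ({"chirps", "sings"}, "canary"),
    ({"frog"}, "green"),
    ({"canary"}, "yellow"),
]


def forward_chain(facts, rules, goal):
    """Fire rules on known facts until the goal appears or nothing changes."""
    known = set(facts)
    changed = True
    while changed and goal not in known:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return goal in known


print(forward_chain({"croaks", "eats_flies"}, RULES, "green"))  # True
print(forward_chain({"croaks"}, RULES, "green"))  # False
```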

Backward chaining:

- Start from a goal

- Work backward through rules whose conclusion matches the goal

- Treat each rule premise as a subgoal, succeeding when all premises are satisfied by known facts
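A matching sketch of backward chaining follows, using the same hypothetical (premises, conclusion) rule format. Each goal is proved recursively by proving the premises of a rule that concludes it. The `visiting` set is an assumption added to avoid infinite recursion on cyclic rules.

```python
# Hypothetical rule base: each rule is (set of premises, conclusion).
RULES = [
    ({"croaks", "eats_flies"}, "frog"),
    ({"chirps", "sings"}, "canary"),
    ({"frog"}, "green"),
    ({"canary"}, "yellow"),
]


def backward_chain(goal, facts, rules, visiting=frozenset()):
    """Prove goal from facts, or by proving every premise of a rule concluding it."""
    if goal in facts:
        return True
    if goal in visiting:  # guard against cyclic rules
        return False
    for premises, conclusion in rules:
        if conclusion == goal and all(
            backward_chain(p, facts, rules, visiting | {goal}) for p in premises
        ):
            return True
    return False


print(backward_chain("green", {"croaks", "eats_flies"}, RULES))  # True
print(backward_chain("yellow", {"chirps"}, RULES))  # False
```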

These systems are deterministic and interpretable, but they handle noisy or uncertain data poorly.

Logic Programming

Logic programming languages like Prolog support reasoning through facts and rules. Prolog uses unification and backtracking to search for variable bindings that satisfy a query. This makes it well suited to relational problems and natural language parsing.

Example:

    parent(john, mary).
    parent(john, tom).
    sibling(X, Y) :- parent(P, X), parent(P, Y), X \= Y.

A query like sibling(mary, Who) succeeds by binding Who to tom.
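To show what unification and backtracking do, here is a small Python imitation of how a Prolog-style engine might evaluate sibling(mary, Who). It is a sketch, not how real Prolog engines are built. Terms are plain strings, and names starting with an uppercase letter are variables, as in Prolog. The parent facts for john, mary, and tom are hypothetical, chosen so the query yields tom. Nested generators stand in for backtracking: when a later goal fails, iteration resumes with the next matching fact.

```python
# Hypothetical facts: parent(john, mary). parent(john, tom).
PARENT_FACTS = [("john", "mary"), ("john", "tom")]


def is_var(term: str) -> bool:
    """Prolog convention: names starting with an uppercase letter are variables."""
    return term[:1].isupper()


def walk(term: str, bindings: dict) -> str:
    """Follow variable bindings until reaching a constant or an unbound variable."""
    while is_var(term) and term in bindings:
        term = bindings[term]
    return term


def unify(a: str, b: str, bindings: dict):
    """Return extended bindings that make a and b equal, or None on failure."""
    a, b = walk(a, bindings), walk(b, bindings)
    if a == b:
        return bindings
    if is_var(a):
        return {**bindings, a: b}
    if is_var(b):
        return {**bindings, b: a}
    return None


def parent(p: str, c: str, bindings: dict):
    """Yield one set of bindings per parent fact that unifies with parent(p, c)."""
    for fact_p, fact_c in PARENT_FACTS:
        b = unify(p, fact_p, bindings)
        if b is not None:
            b = unify(c, fact_c, b)
        if b is not None:
            yield b


def sibling(x: str, y: str, bindings: dict):
    """sibling(X, Y) :- parent(P, X), parent(P, Y), X \\= Y."""
    # Simplification: the rule variable P is not renamed apart from query variables.
    for b1 in parent("P", x, bindings):
        for b2 in parent("P", y, b1):
            # Both x and y are bound to constants here, so this mirrors X \= Y.
            if walk(x, b2) != walk(y, b2):
                yield b2


print([walk("Who", b) for b in sibling("mary", "Who", {})])  # ['tom']
```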

Probabilistic Reasoning

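Probabilistic models extend reasoning to handle uncertainty. Bayesian networks represent probabilistic dependencies between variables as a directed acyclic graph. Markov logic networks attach weights to first-order logic formulas, combining probability with logic. Both allow reasoning with incomplete or ambiguous data.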

For example, Bayesian inference updates beliefs based on evidence:

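$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}
$$

where H is a hypothesis and E is the observed evidence.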

Such models are used in recommendation systems, robotics, and diagnostics.
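Here is a worked Bayesian update in Python. It applies Bayes' theorem, with the evidence term P(E) expanded by the law of total probability. The scenario and numbers are hypothetical and chosen only for illustration: a condition with 1% prevalence, and a test with 90% sensitivity and a 5% false-positive rate.

```python
def bayes_update(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Posterior P(H | E) via Bayes' theorem.

    P(E) is expanded as P(E | H) P(H) + P(E | not H) P(not H).
    """
    evidence = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / evidence


# Hypothetical diagnostic test: 1% prior, 90% sensitivity, 5% false positives.
posterior = bayes_update(0.01, 0.90, 0.05)
print(round(posterior, 3))  # 0.154

# A second positive result reuses the first posterior as the new prior,
# assuming the two tests are conditionally independent given H.
posterior2 = bayes_update(posterior, 0.90, 0.05)
print(round(posterior2, 3))  # 0.766
```

Even after one positive result, the posterior is only about 15%, because the condition is rare. Repeated evidence then raises it substantially.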

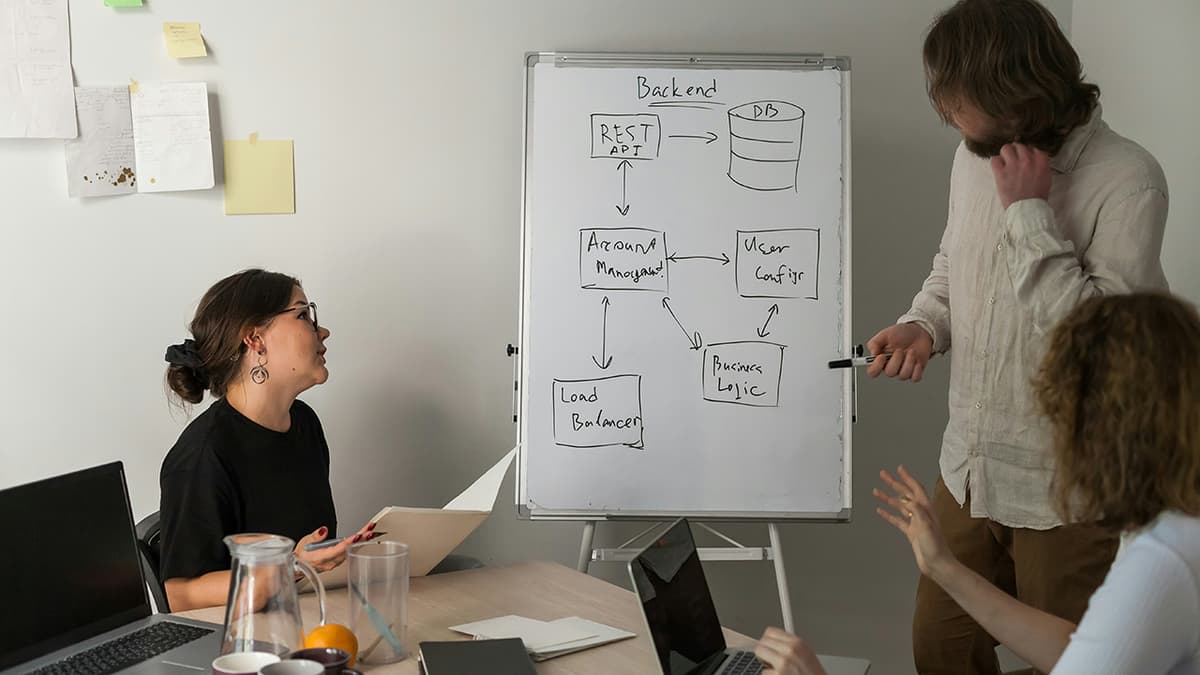

Knowledge Graphs and Ontologies

Modern AI often incorporates structured knowledge through knowledge graphs. Entities and their relationships are stored as triples (subject, predicate, object). Reasoning over these graphs uses graph traversal, rule application, and embedding-based methods.

Example triple:

    (Paris, capitalOf, France)

Inference can fill in missing facts (link prediction) or answer complex multi-hop queries, using symbolic rules, neural embeddings, or both.
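The following Python sketch stores a knowledge graph as a set of (subject, predicate, object) triples and applies one symbolic rule to infer missing triples. The entities, the predicates capitalOf and locatedIn, and the rule itself are hypothetical examples, not a standard vocabulary.

```python
# Hypothetical knowledge graph as (subject, predicate, object) triples.
TRIPLES = {
    ("Paris", "capitalOf", "France"),
    ("France", "locatedIn", "Europe"),
    ("Berlin", "capitalOf", "Germany"),
    ("Germany", "locatedIn", "Europe"),
}


def objects(triples, subject, predicate):
    """All objects o such that (subject, predicate, o) is in the graph."""
    return {o for s, p, o in triples if s == subject and p == predicate}


def infer_located_in(triples):
    """Rule: capitalOf(X, Y) and locatedIn(Y, Z) imply locatedIn(X, Z).

    Returns only the triples that were not already in the graph.
    """
    inferred = set()
    for x, p, y in triples:
        if p == "capitalOf":
            for z in objects(triples, y, "locatedIn"):
                inferred.add((x, "locatedIn", z))
    return inferred - triples


new_facts = infer_located_in(TRIPLES)
print(sorted(new_facts))
# [('Berlin', 'locatedIn', 'Europe'), ('Paris', 'locatedIn', 'Europe')]
```

An embedding-based method would instead score candidate triples with a learned model. That trades the traceability of explicit rules for the ability to propose facts no rule covers.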

Hybrid Reasoning

Combining symbolic reasoning with sub-symbolic (neural) models is becoming more common. Neuro-symbolic AI pairs the explicit structure of symbolic reasoning with the pattern-matching power of deep learning. The aim is to capture both logical inference and the ability to generalize from data.

Example applications include:

- Visual question answering

- Semantic parsing

- Robotics planning
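As a rough illustration of the hybrid pattern in visual question answering, the Python sketch below separates a perception step from a symbolic program. The neural detector is mocked with hypothetical (label, confidence) scores; in a real system, a trained network would produce them. The 0.5 confidence threshold and the function names are also assumptions.

```python
# Mock output of a neural object detector: hypothetical (label, confidence) pairs.
DETECTIONS = [("cube", 0.94), ("sphere", 0.88), ("cube", 0.41), ("cylinder", 0.97)]


def to_symbols(detections, threshold=0.5):
    """Neural-to-symbolic step: keep confident detections as discrete facts."""
    return [label for label, confidence in detections if confidence >= threshold]


def count(objects, label):
    """Symbolic program step: count objects with a given label."""
    return sum(1 for obj in objects if obj == label)


def exists(objects, label):
    """Symbolic program step: check whether any object has the label."""
    return label in objects


# Question: "How many cubes are there?" compiled to count(scene, "cube").
scene = to_symbols(DETECTIONS)
print(count(scene, "cube"))  # 1
print(exists(scene, "sphere"))  # True
```

The symbolic half is exact and inspectable, because each answer can be traced to specific detections. The neural half handles raw perception, so detector errors still flow into the answer.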

Challenges

Despite progress, AI reasoning still faces key challenges:

- Scalability: Logical inference can become computationally expensive as the number of facts and rules grows.

- Data quality: Incorrect or incomplete facts and rules produce incorrect conclusions; garbage in, garbage out applies to reasoning as well.

- Ambiguity: Natural language and real-world concepts are not always logically clean.

- Integration: Bridging symbolic and neural models is complex.

Conclusion

AI reasoning enables machines to apply logic, learn rules, and draw conclusions beyond simple pattern detection. While rule-based systems remain relevant in specific domains, much current research focuses on hybrid models that combine reasoning with learning. The goal is to build systems that not only predict but also explain and justify their conclusions through structured reasoning.