What Does "Pre-trained" Mean in GPT (Generative Pre-trained Transformer)?

We often discuss ChatGPT, and many are aware that GPT stands for Generative Pre-trained Transformer. But have you ever wondered what the term "pre-trained" really means in this context? Why is it pre-trained, and does this pre-training limit the performance of AI?

What is GPT?

Imagine GPT as a very capable robot that lives entirely in software. Its job is to work with language: it can write, answer questions, and even craft stories or poems. This robot has no arms or legs, but it has something like a super-brain: a large language model that processes words and sentences and produces replies that closely resemble human communication.

This capability goes beyond stringing words together. GPT can pick up on nuances of language such as context, emotional tone, and even humor. It's like having a conversation with a very knowledgeable friend who not only follows what you're saying but also knows a lot about many topics and can reply in a meaningful way.

What Does "Pre-trained" Mean?

The "pre-trained" part refers to the learning phase our GPT robot goes through before it is put to work. Before it starts writing or chatting, it needs to learn a lot about language. It does this by studying massive amounts of text (books, websites, articles, and more) and repeatedly trying to predict which word comes next. By doing this, GPT learns how words and sentences are usually put together, what they mean, and how they can be used in different situations.

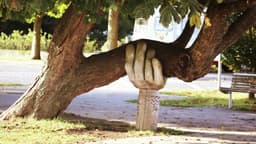

Imagine you're learning to play soccer. Before you start playing in actual games, you spend a lot of time practicing - kicking the ball, running, learning the rules, and watching others play. That's similar to what "pre-training" is for GPT. It's like its practice phase before the real game.
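To make the "practice phase" concrete, here is a minimal sketch in Python. It is a toy, not how GPT is actually built: GPT uses a large Transformer neural network, while this example only counts which word tends to follow which. The names `pretrain` and `predict_next` and the three-sentence corpus are hypothetical and chosen purely for illustration. The shared idea is that learning happens first, from text, and the learned result is then reused to make predictions.

```python
from collections import Counter, defaultdict


def pretrain(corpus):
    """Toy 'pre-training': count which word follows which across all sentences."""
    next_word_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current, following in zip(words, words[1:]):
            next_word_counts[current][following] += 1
    return next_word_counts


def predict_next(model, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical mini-corpus standing in for the books, websites, and articles.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the cat sat on the sofa",
]

model = pretrain(corpus)            # the "practice" happens once, up front
print(predict_next(model, "cat"))   # sat
print(predict_next(model, "sat"))   # on
```

Once `pretrain` has run, `predict_next` can be called as often as needed without looking at the corpus again, which mirrors the way a pre-trained model is reused after its training is finished.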

Why is GPT Pre-trained?

- To Understand Language Better: Just like you need to practice soccer to play well, GPT needs to study a lot of text to understand language. This pre-training helps it make sense of what words mean and how they're used.

- To Save Time and Effort Later: If every time someone wanted to use GPT they had to start from scratch and teach it all about language, it would take a long time! Pre-training GPT means it already knows a lot about language, so it can start doing its job faster.

- To Be More Accurate and Useful: The more GPT learns during its pre-training, the better it gets at understanding and generating language. This means it can write more accurately, answer questions better, and be more helpful.

Reasons for Pre-training GPT

Beyond the reasons above, pre-training is what makes GPT useful in practice:

- Enhancing Conversations: Pre-training gives GPT the ability to hold more human-like conversations. Drawing on its extensive language learning, it can recognize humor, respond to questions effectively, and offer advice.

- Fueling Creative Writing: Pre-training equips GPT with the skills to craft stories, poems, and even news articles. It becomes like a robotic author, enriched by its exposure to a wide range of literature and writing styles.

- Assisting in Academic and Professional Tasks: GPT's proficiency in language, honed through pre-training, allows it to help with tasks such as writing essays, summarizing complex articles, or translating between languages.

- Enriching Educational Experiences: GPT can make learning more engaging and fun. For example, it can create historical narratives set in ancient civilizations or write imaginative dialogues for science lessons.

Pre-training is the stage in which GPT absorbs a vast amount of knowledge about language. This stage is crucial because it is what makes GPT efficient and versatile across language-related tasks. Much like practicing a sport improves your skills, pre-training hones GPT's linguistic abilities. It shows the potential of teaching machines to assist, educate, and entertain us in innovative and practical ways.