The Role of Embedding Models in Retrieval Augmented Generation

Imagine you're writing a story and get stuck at some point. You might go back to your favorite book or the internet to find inspiration or gather more information. Similarly, Retrieval Augmented Generation (RAG) allows AI models to "look up" relevant information while generating text. It's like the AI has access to a vast library of information it can search through to make its output more accurate and informative.

The Role of Embedding Models

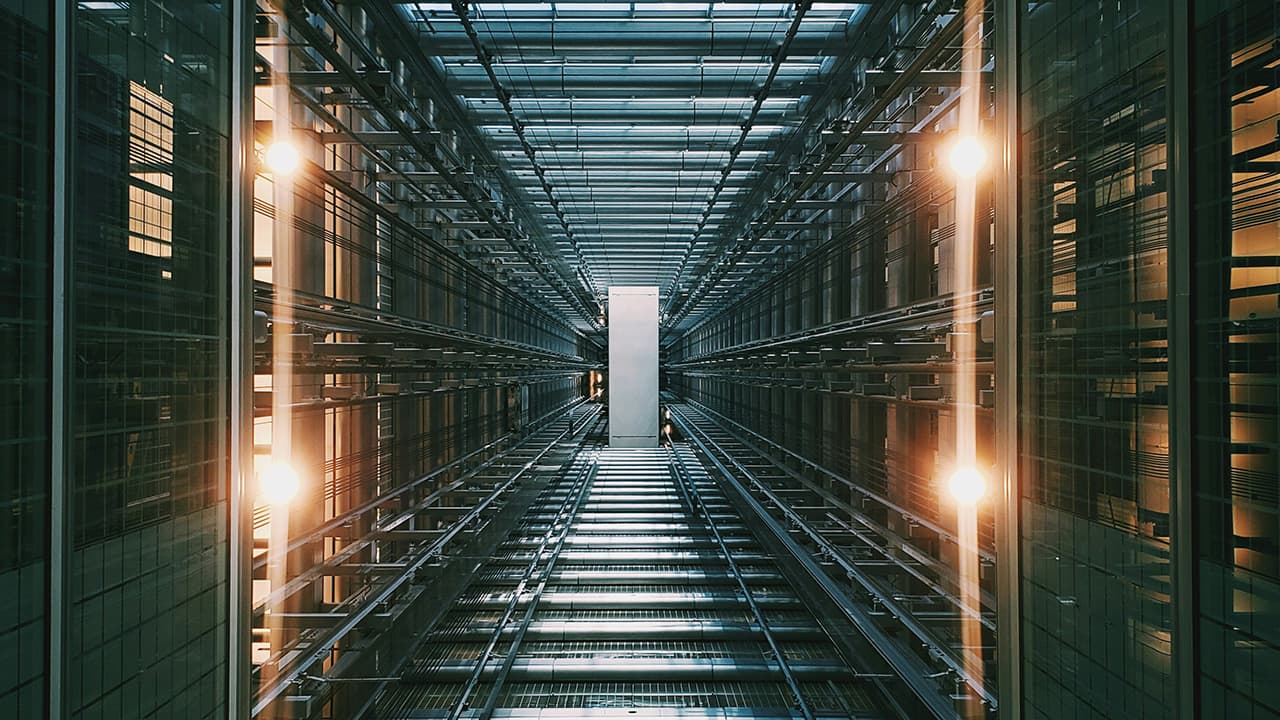

An embedding model is like the librarian in our AI's library. It organizes information so the AI can efficiently find what it needs. But instead of arranging books on shelves, it deals with data - lots of data. Here’s how it works:

- Understanding the Data

The embedding model takes the words, sentences, or paragraphs in the data and converts them into a form a computer can compare numerically: vectors (essentially, long lists of numbers). Each vector represents the essence of a word or sentence, capturing its meaning and how it relates to other words or sentences.
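To make "text in, list of numbers out" concrete, here is a minimal sketch. It is not a real embedding model: real ones are trained neural networks that capture meaning, while this toy stand-in (the hypothetical `toy_embed` function, with an assumed dimension of 16) only hashes words into slots. It does show the shape of the idea: any text becomes a vector of fixed length.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Toy stand-in for an embedding model (illustrative assumption only).

    Each word is hashed into one of `dim` slots, and the resulting
    count vector is scaled to unit length.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        # hashlib gives the same result on every run, unlike built-in hash()
        digest = hashlib.md5(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dim
        vec[index] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    # Empty text has no words, so it stays an all-zero vector
    return [x / norm for x in vec] if norm > 0 else vec


vector = toy_embed("The librarian organizes the books")
print(len(vector))  # the vector length always equals `dim`
```

Unlike this sketch, a trained model would place "librarian" and "library" near each other because of their meaning, not because of how they happen to hash.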

- Creating a Space of Knowledge

Imagine each vector as a point in space. Words or sentences with similar meanings are placed closer together, while those with different meanings are farther apart. Closeness is typically measured with a distance or with cosine similarity, which compares the directions of two vectors. This space makes it possible for the AI to work with relationships and nuances in language.

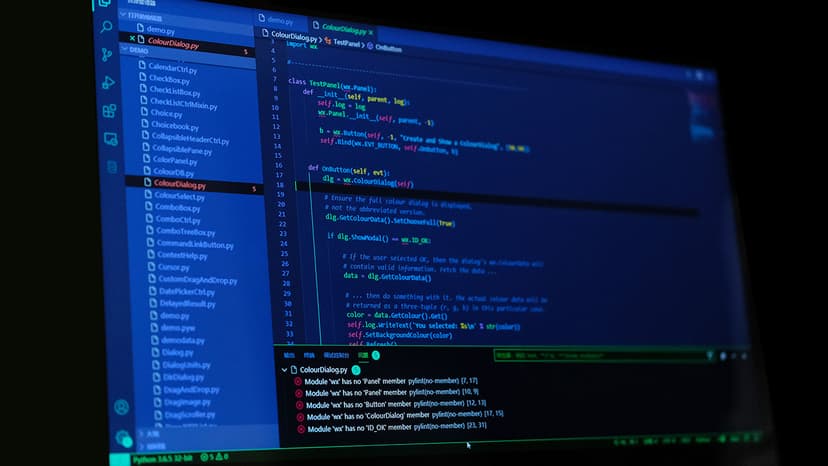
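As a rough illustration of "closer together" and "farther apart", the sketch below computes cosine similarity between a few hand-picked vectors. The three-dimensional vectors for "cat", "kitten", and "car" are made-up values chosen for this example, not output from any real model, and real embeddings usually have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical, hand-picked vectors for illustration only
cat = [0.9, 0.8, 0.1]
kitten = [0.85, 0.9, 0.15]
car = [0.1, 0.2, 0.95]

print("cat vs kitten:", round(cosine_similarity(cat, kitten), 3))
print("cat vs car:   ", round(cosine_similarity(cat, car), 3))
```

With these values, "cat" scores much higher against "kitten" than against "car", which is the behavior the picture of a shared space is meant to convey.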

- Facilitating the Search

When the AI needs additional information to generate text, it starts with a query (what it's looking for). The embedding model turns that query into a vector too, and the retrieval step then searches the space for the stored vectors (and therefore, pieces of information) closest to it. It's like asking the librarian for books on a specific topic, and the librarian knows exactly where to look.
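The search step can be sketched in a few lines. This is a simplified illustration under stated assumptions: `embed` here is a word-count stand-in rather than a trained model, `retrieve` and the three sample documents are hypothetical, and the loop compares the query against every document, whereas production systems usually precompute document vectors and store them in an index.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents whose vectors are closest to the query vector."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(doc)), doc) for doc in documents]
    # Highest similarity first; ties keep their original order
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


documents = [
    "dinosaurs lived millions of years ago",
    "a bank stores money for customers",
    "the river bank was covered in reeds",
]

print(retrieve("when did dinosaurs live", documents, k=1))
```

In a RAG pipeline, the retrieved passages would then be handed to the text generator alongside the original question. Note that this word-count stand-in only matches exact words, so it cannot tell the two senses of "bank" apart; that is the gap a trained embedding model is meant to close.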

How Embedding Models Enhance RAG

Embedding models are crucial for making RAG efficient and effective. Here's why:

- Precision in Retrieval

By organizing information into a searchable space, embedding models help the AI find relevant information quickly, even when the query and the source text use different words for the same idea. This relevance is key to generating accurate and informative text.

- Understanding Context

Words can have different meanings depending on their context. For example, "bank" can refer to a financial institution or the side of a river. Embedding models help AI understand these nuances by looking at how words are used in relation to others, enhancing the AI's ability to retrieve contextually appropriate information.

- Improving Over Time

Embedding models do not have to stay fixed. They do not learn from queries on their own, but they can be fine-tuned or retrained as more data becomes available, and records of past queries can show where retrieval falls short. With updates like these, the AI's ability to retrieve relevant information and generate accurate text can improve over time.

Simplicity in Complexity

In the maze of technology that makes AI smart, the journey of RAG and embedding models might seem like a complex puzzle. But the treasure at the end of this puzzle is clear and simple: we want AI to chat, narrate, and explain things as smoothly and smartly as a wise friend would. Whether you're curious about dinosaurs, crafting a poem, or digging into history, embedding models equip AI with a flashlight to search through its massive digital library for exactly what you need, when you need it.

Think of it like this: every time you chat with an AI that uses RAG, it's as if it zips through the world's biggest library in seconds, pulls out the right book, and flips to the page you're interested in. Whether it's spinning up a tale, answering a quirky question, or compiling a report, these models help the AI avoid throwing random facts at you. Instead, it weaves information together in a way that's not only right but also relevant and engaging, like a good story told by a friend. So, despite the behind-the-scenes complexity, the aim is beautifully straightforward: to craft AI-generated text that's as precise, rich, and natural as possible, making our interaction with machines more meaningful.