Is it safe to work with AI?

Artificial intelligence (AI) is becoming a significant part of many industries and of our daily lives. From autonomous vehicles to virtual assistants, the technology holds real promise, yet concerns about its safety and risks must be addressed. This article explores the question "Is it safe to work with AI?" and discusses the main aspects of AI safety.

Understanding the Risks

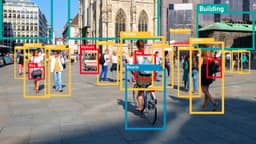

AI technology can bring many benefits, but it also poses certain risks. Key concerns include:

- Job Automation: AI can automate tasks previously performed by humans, leading to job displacement in some professions.
- Misinformation: AI systems can generate fake news and help it spread quickly, threatening the integrity of information in politics and public health.
- Autonomous Weapons: AI-powered weaponry raises concerns about lethal decisions being made without human control, which could escalate conflicts.

Ensuring Transparency and Explainability

Transparency and explainability are vital for the safe use of AI. AI systems should let users understand how they reach their decisions, especially in critical areas such as healthcare and finance. To be trustworthy, an AI system must hold up under independent scrutiny and validation.

Mitigating Risks and Ensuring Safety

Putting risk-mitigation measures in place is crucial for the safe use of AI. Organizations such as OpenAI, for example, test their models extensively and involve external experts to provide feedback on them. Practices like these reflect a commitment to safety and ongoing monitoring.

Responsible and ethical AI practices also play a key role. Organizations should develop ethical guidelines for AI development and deployment that address algorithmic bias, user privacy, and fairness.

Human-Machine Collaboration

AI technologies can enhance human capabilities rather than replace them, and this collaboration can lead to greater efficiency and innovation. By assisting people with tasks, AI can also create new opportunities and job roles, offsetting some of the jobs that automation eliminates.

The Role of Regulation and Governance

Regulation and governance frameworks are crucial for the safe use of AI. Governments and regulatory bodies should establish guidelines that address ethical concerns and ensure transparency and accountability in AI technologies.

Working with AI presents both opportunities and risks. While AI has great potential, addressing its safety concerns is essential. Transparency, explainability, and ethical practices are key to mitigating these risks, and a collaborative approach between humans and machines, supported by effective regulation and governance, is crucial for a secure AI environment.