Tackling the Scale: What Is Class Imbalance in Machine Learning?

Imagine you're on a seesaw, and on one end, there's a big ol' elephant, and on the other end, there's a tiny mouse. Clearly, the seesaw's going to be pretty lopsided, right? Well, class imbalance in machine learning is a bit like that seesaw. It's what happens when the classes in your data aren't represented equally, causing the scales to tip in favor of one class over the others.

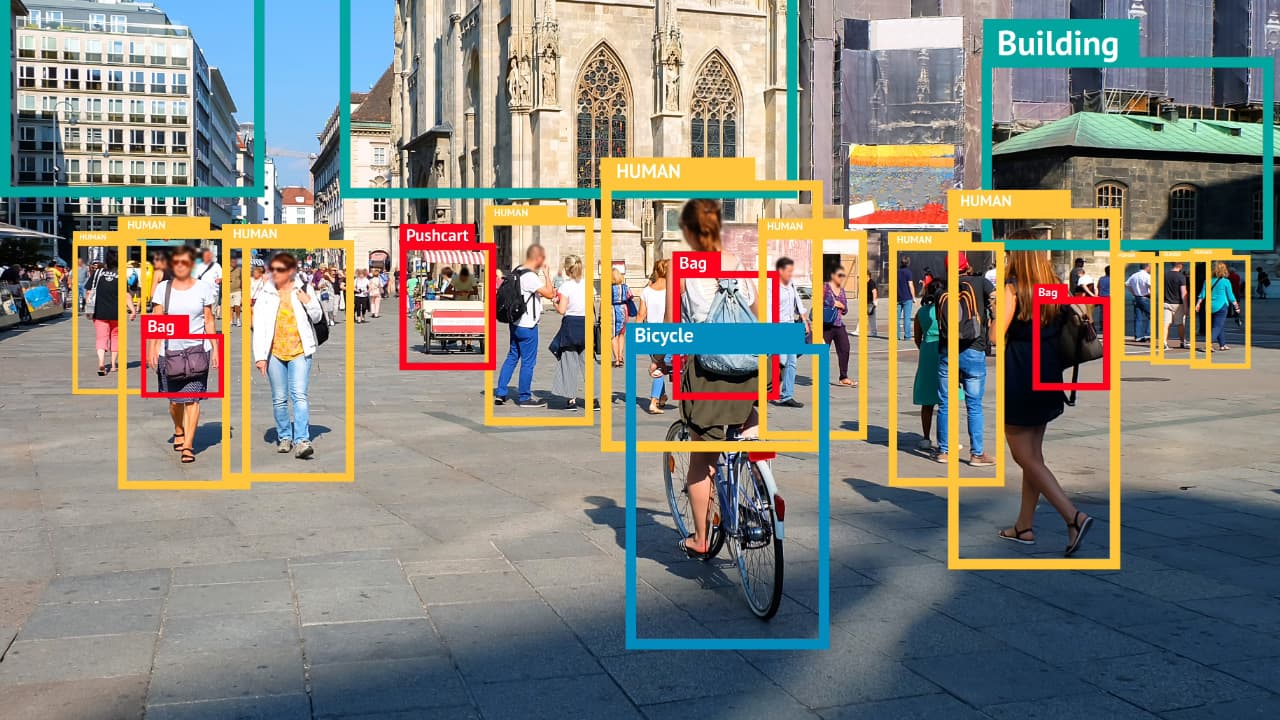

In our beautiful world of AI, machine learning algorithms learn from data to make predictions or decisions. These algorithms can be really nifty at finding patterns and giving us insights. But what if the data they're learning from is about as balanced as a hippo on a tightrope? That's when we have to deal with the pesky issue of class imbalance.

What Exactly Is Class Imbalance?

Picture a dataset that you're using to train a machine learning model to detect whether an email is spam. If, out of a hundred emails, ninety-five are not spam and only five are spam, you've got yourself a classic case of class imbalance. Your algorithm might get lazy and assume that nearly every email it comes across is not spam, because that's what it mostly sees in the data. After all, a model that labels every single email "not spam" would still be right 95% of the time. The minority class (spam, in this case) gets overshadowed and often misclassified because it wasn't represented enough during training.

Why Should We Care?

Well, let's say you're using that same model in your email application. If it keeps waving spam through to people's inboxes just because it saw too few spam examples during training, that's going to be a mega headache for users. In high-stakes applications, like medical diagnosis or fraud detection, the stakes are even higher. Failing to detect a rare disease or a fraudulent transaction because of class imbalance could have severe consequences.

The Balancing Act

The good news is, there are some pretty neat techniques to combat class imbalance and get our machine learning seesaw to a more equitable level. Here's a rundown of some popular methods:

1. Resampling

Resampling is like adding some weight to the mouse or lightening the elephant to balance the seesaw. We can either over-sample the minority class by making more copies of it (that's like cloning our mouse) or under-sample the majority class by taking some instances away (like putting the elephant on a diet).
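To make resampling concrete, here's a minimal sketch in Python with NumPy, assuming a two-class problem like the spam example (label 1 for spam, 0 for not spam). The helper names `random_oversample` and `random_undersample` are just illustrative; they don't come from any particular library.

```python
import numpy as np

def random_oversample(X, y, minority_label, rng):
    """Clone randomly chosen minority rows until both classes have equal counts."""
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    # Draw minority indices WITH replacement to make up the shortfall (cloning the mouse).
    extra = rng.choice(minority_idx, size=len(majority_idx) - len(minority_idx), replace=True)
    keep = np.concatenate([majority_idx, minority_idx, extra])
    return X[keep], y[keep]

def random_undersample(X, y, minority_label, rng):
    """Drop randomly chosen majority rows until both classes have equal counts."""
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    # Keep only as many majority rows as there are minority rows (the elephant's diet).
    kept_majority = rng.choice(majority_idx, size=len(minority_idx), replace=False)
    keep = np.concatenate([kept_majority, minority_idx])
    return X[keep], y[keep]

rng = np.random.default_rng(0)
# Toy data modeled on the spam example: 95 "not spam" (0) and 5 "spam" (1).
X = rng.normal(size=(100, 2))
y = np.array([0] * 95 + [1] * 5)

X_over, y_over = random_oversample(X, y, minority_label=1, rng=rng)
X_under, y_under = random_undersample(X, y, minority_label=1, rng=rng)
print(np.bincount(y_over))   # [95 95]
print(np.bincount(y_under))  # [5 5]
```

Notice that the 90 extra spam rows in the over-sampled set are exact clones of the original five, while the under-sampled set keeps only 10 rows out of 100.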

2. Synthetic Data Generation

Think of synthetic data generation as creating a bunch of robot mice to beef up the numbers on the minority side. Techniques like SMOTE (Synthetic Minority Over-sampling Technique) create new, synthetic examples of the minority class, each placed somewhere on the line between an existing minority example and one of its nearest minority-class neighbors, to help even things out.
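Here's a rough sketch of the core SMOTE idea in Python with NumPy: pick a minority example, pick one of its k nearest minority-class neighbors, and drop a new point at a random spot on the line between them. This is a simplified illustration under those assumptions (`smote_sketch` and its `k` parameter are hypothetical names, and the "spam" feature vectors are made up), not a full implementation of the published algorithm.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=3, rng=None):
    """Make n_new synthetic minority rows by interpolating between a minority
    example and one of its k nearest minority-class neighbors."""
    rng = np.random.default_rng() if rng is None else rng
    # Pairwise Euclidean distances between all minority examples.
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)               # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # k closest rows for each example

    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        a = rng.integers(len(X_min))              # random minority example
        b = rng.choice(neighbors[a])              # one of its k nearest neighbors
        gap = rng.random()                        # how far along the line to go, in [0, 1)
        synthetic[i] = X_min[a] + gap * (X_min[b] - X_min[a])
    return synthetic

rng = np.random.default_rng(0)
X_spam = rng.normal(loc=2.0, size=(5, 2))         # 5 made-up spam feature vectors
new_spam = smote_sketch(X_spam, n_new=90, k=3, rng=rng)
print(new_spam.shape)  # (90, 2)
```

Unlike cloning, every robot mouse here is a brand-new point, although it always lies between two real ones. In practice you'd probably reach for a library such as imbalanced-learn, which provides a `SMOTE` class with a `fit_resample(X, y)` method, rather than rolling your own.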

3. Algorithmic Adjustments

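Some algorithms come with settings we can tweak to make them pay more attention to the minority class. A common one is class weights, which make a mistake on a minority example cost more during training than a mistake on a majority example. It's like telling the algorithm, "Hey, don't ignore the mouse just because it's small!"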
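Here's a small sketch of class weighting in Python with NumPy. It weights each class inversely to how often it appears (the same formula scikit-learn documents for `class_weight="balanced"`: n_samples / (n_classes × class count)) and plugs those weights into a binary cross-entropy loss. The function names are hypothetical, and the "lazy" model that gives every email a 5% spam probability is an invented example.

```python
import numpy as np

def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * count), so rarer classes weigh more."""
    counts = np.bincount(y)
    return len(y) / (len(counts) * counts)

def weighted_log_loss(y_true, p_spam, class_weights):
    """Binary cross-entropy where each example's loss is scaled by its class weight."""
    eps = 1e-12
    p = np.clip(p_spam, eps, 1 - eps)  # avoid log(0)
    per_example = -(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))
    w = class_weights[y_true]          # look up each example's weight by its label
    return np.sum(w * per_example) / np.sum(w)

y = np.array([0] * 95 + [1] * 5)       # 95 "not spam", 5 "spam"
weights = balanced_class_weights(y)
print(weights)                         # about 0.53 for "not spam" and 10.0 for "spam"

# A "lazy" model that gives every email the same 5% chance of being spam.
lazy_p = np.full(len(y), 0.05)
plain = weighted_log_loss(y, lazy_p, np.ones(2))  # equal weights = ordinary loss
weighted = weighted_log_loss(y, lazy_p, weights)
print(f"plain loss: {plain:.3f}, weighted loss: {weighted:.3f}")  # plain ≈ 0.20, weighted ≈ 1.52
```

With equal weights, the lazy model looks almost fine, because its misses on the five spam emails are drowned out by the 95 easy ones. With balanced weights, those misses dominate the loss, which is exactly the nudge toward the mouse we're after. In scikit-learn, many classifiers, such as `LogisticRegression`, accept `class_weight="balanced"` directly.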

4. Different Performance Metrics

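Sometimes, it's not about shifting the weight around but about using a different way to measure success. Accuracy can be misleading when dealing with class imbalance, so we might focus instead on precision (of the emails flagged as spam, how many really are spam), recall (of the actual spam emails, how many were caught), or the F1 score (the harmonic mean of precision and recall) to get a better picture of how well our model is doing.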
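To see why accuracy can fool us, here's a plain-Python sketch that scores two made-up spam filters on the same 95/5 dataset. `binary_scores` is a hypothetical helper, and reporting 0.0 when a ratio's denominator is zero is a convention assumed here.

```python
def binary_scores(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one 'positive' class (here: spam)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # spam caught
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # spam missed
    tn = len(pairs) - tp - fp - fn                               # normal mail left alone
    accuracy = (tp + tn) / len(pairs)
    # Assumed convention: report 0.0 when a ratio's denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [0] * 95 + [1] * 5  # 95 "not spam", 5 "spam"

# Model A: lazily calls everything "not spam".
lazy = [0] * 100
print(binary_scores(y_true, lazy))
# {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}

# Model B: catches 4 of the 5 spam emails but raises 3 false alarms.
better = [1] * 3 + [0] * 92 + [1] * 4 + [0]
print({k: round(v, 3) for k, v in binary_scores(y_true, better).items()})
# {'accuracy': 0.96, 'precision': 0.571, 'recall': 0.8, 'f1': 0.667}
```

Accuracy barely budges between the two models (0.95 vs 0.96), while recall and F1 make it obvious that only the second one is actually finding spam. If you'd rather not write these by hand, scikit-learn's `sklearn.metrics` module offers `precision_score`, `recall_score`, and `f1_score`.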

Challenges to Overcome

While the techniques above sound really cool, they're not without their own issues. Over-sampling by copying minority examples can lead to overfitting: the model sees the same few examples over and over, memorizes them, and then doesn't perform well on new, unseen data. Under-sampling can lead to information loss, since we're basically throwing away data that might have been useful.

Famous Faces

Even big companies like Google, Apple, and Microsoft grapple with class imbalance in the real world, since tasks like spam filtering and fraud detection naturally revolve around rare classes. Handling it well is part of delivering accurate and reliable machine learning applications to their users.

The Road Ahead

Class imbalance is a speed bump on the road to creating robust and fair machine learning models. It challenges us to be more creative and thoughtful about how we handle our data and what methods we employ to ensure our algorithms are as equitable as they are intelligent.

The dance with data never ends, and machine learning enthusiasts are always coming up with innovative ways to tackle class imbalance. As we continue to learn and grow in this space, we're making sure that every mouse has its day and every elephant can share the seesaw without tipping the balance.