Why Does LangChain Cost So Many Tokens?

If you've heard about LangChain, you might also have heard whispers that it consumes a boatload of tokens. But why is that the case? Tokens, in the world of Natural Language Processing (NLP), are the basic units in which text is processed. They are also the unit you pay in: hosted large language models (LLMs) are typically billed per token, counting both the text you send and the text they generate, and their context windows are measured in tokens. Let's explore why LangChain might devour so many of them, using simple language and a sprinkle of creativity.

What Are Tokens?

Before we jump into LangChain's voracious appetite for tokens, let's get a grasp of what tokens are. In NLP, a token is an individual piece of text: a word, part of a word, or even a punctuation mark. For example, "LangChain is amazing!" could be broken into the tokens "LangChain", "is", "amazing", and "!". The exact split depends on the tokenizer; the sub-word tokenizers used by most LLMs may cut a less common word such as "LangChain" into several pieces.
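To make the idea concrete, here is a toy tokenizer that splits text into words and punctuation marks. It is a simplification for illustration only (the name `simple_tokenize` is ours, not from any library). Real LLM tokenizers use learned sub-word vocabularies, so their counts will differ.

```python
import re

# Deliberately simplified: one token per run of word characters, and one per
# punctuation mark. Real LLM tokenizers often split words into sub-word pieces.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens (whitespace is dropped)."""
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    tokens = simple_tokenize("LangChain is amazing!")
    print(tokens)       # ['LangChain', 'is', 'amazing', '!']
    print(len(tokens))  # 4
```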

Tokens are essential because they are the building blocks that NLP algorithms use to understand and generate human language. Think of them as the tiny puzzle pieces that, when properly assembled, create a meaningful picture.

LangChain: The Basics

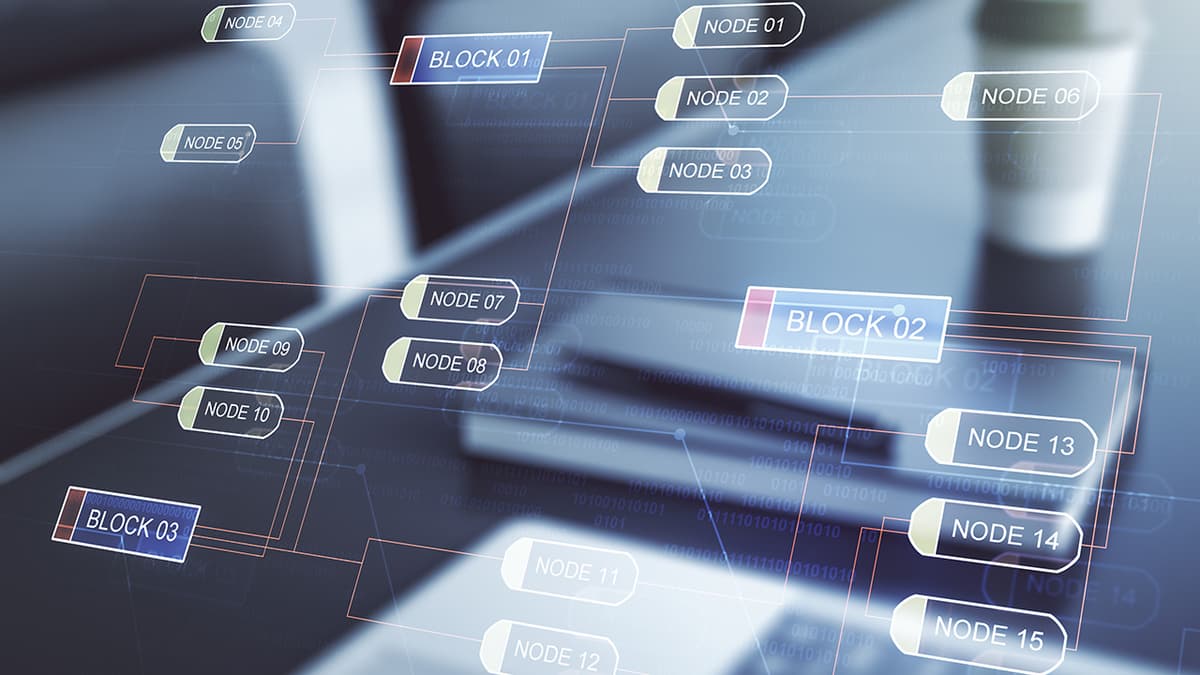

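LangChain is an open-source framework for building applications on top of large language models. It links prompts, model calls, and other components such as document retrievers, tools, and memory into a "chain" to perform complex tasks. Rather than sending a single prompt for a single task, a LangChain application can carry out multiple, interrelated steps, which makes it a very powerful tool. Note that LangChain does not consume tokens by itself: every token is spent by the model calls it makes on your behalf, so the real question is how many calls it makes and how large their prompts are.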

Why So Many Tokens?

Complex Interactions

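LangChain involves multiple stages of processing. Each stage typically makes its own model call: it takes an input, formats it into a prompt, sends it to the model, and passes the result to the next stage. Every call is billed for both its prompt tokens and its output tokens, and the output of one stage usually becomes part of the next stage's prompt. A chain of several steps therefore tends to spend more tokens than a single prompt answering the same request.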

Imagine writing a story. Each chapter builds on the previous one to create a comprehensive and engaging narrative. LangChain operates in a similar way. Each stage needs tokens from the previous stage to make sense of the entire task.
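Here is a minimal sketch of why chaining adds up. It assumes a hypothetical `fake_llm` that stands in for a real model call and a crude word-and-punctuation counter in place of a real tokenizer. It runs three prompt templates in sequence, feeding each output into the next, and totals the tokens sent and received across all calls.

```python
import re
from collections.abc import Callable


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: counts words and punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def run_chain(user_input: str, step_templates: list[str],
              llm: Callable[[str], str]) -> tuple[str, int]:
    """Run prompt templates in sequence, feeding each output into the next prompt.

    Returns the final output and the total number of tokens sent to and
    received from the model across all steps.
    """
    total_tokens = 0
    current = user_input
    for template in step_templates:
        prompt = template.format(input=current)  # previous output becomes new input
        output = llm(prompt)                     # one billed model call per stage
        total_tokens += count_tokens(prompt) + count_tokens(output)
        current = output
    return current, total_tokens


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call that always returns a short reply."""
    return "Some model output."


if __name__ == "__main__":
    steps = [
        "Extract the key facts from: {input}",
        "Write an outline from these facts: {input}",
        "Write a paragraph from this outline: {input}",
    ]
    final, used = run_chain("LangChain links several model calls together.", steps, fake_llm)
    print(final, used)
```

By this crude count the three calls total 47 tokens, although the user typed only one short sentence; every extra stage adds another billed round trip.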

Detailed Contexts

NLP models rely heavily on context to make sense of language. A language model keeps no memory between calls, so whatever context a step needs (instructions, background material, earlier results) has to be included in that step's prompt every time. The more complex the task, the more context each prompt carries, and the higher the token usage.

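For instance, if a task involves summarizing a lengthy document, the document's text itself has to be sent to the model, which can mean many thousands of tokens. If the document is longer than the model's context window, it has to be split into chunks that are processed separately and then combined, which adds further calls on top of the document's own length.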
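When a document is too long for one prompt, a common approach is to split it into overlapping chunks and summarize each chunk separately before combining the results (the "map" step of what LangChain calls map-reduce summarization). This sketch counts the prompt tokens of that map step alone. The helper names, chunk sizes, and instruction length are hypothetical, and a list of integers stands in for the document's tokens.

```python
def split_into_chunks(tokens: list, chunk_size: int, overlap: int) -> list[list]:
    """Split a token sequence into windows of chunk_size that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window reached the end of the document
        start += step
    return chunks


def map_step_prompt_tokens(doc_tokens: list, chunk_size: int, overlap: int,
                           instruction_tokens: int) -> int:
    """Prompt tokens for summarizing every chunk separately (the 'map' step).

    Each chunk is sent with the same instruction text, so the instruction is
    paid for once per chunk, and overlapping tokens are paid for twice.
    """
    chunks = split_into_chunks(doc_tokens, chunk_size, overlap)
    return sum(instruction_tokens + len(chunk) for chunk in chunks)


if __name__ == "__main__":
    document = list(range(10_000))  # placeholder for a hypothetical 10,000-token document
    print(len(split_into_chunks(document, 1_000, 100)))
    print(map_step_prompt_tokens(document, 1_000, 100, instruction_tokens=50))
```

With 1,000-token chunks, 100 tokens of overlap, and a 50-token instruction, the map step alone sends 11 chunks and 11,550 prompt tokens for a 10,000-token document. That is before counting the summaries the model writes or the final combining call.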

High Accuracy

Prompts that aim for accurate, reliable output tend to be longer. Detailed instructions, worked examples of the desired answer (few-shot prompting), and formatting rules all improve the odds that the model gets the details right, but they are sent along with every single call. Multiplied across all the calls in a chain, this extra text directly translates to higher token consumption.
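As an illustration of how reliability-oriented prompting inflates every call, this sketch builds a sentiment-classification prompt with and without two worked examples. LangChain offers a `FewShotPromptTemplate` for this pattern, but the sketch uses plain string formatting. The task, examples, and call count are hypothetical, and tokens are counted with a crude word-and-punctuation rule.

```python
import re


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: counts words and punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def build_prompt(review: str, examples: list[tuple[str, str]]) -> str:
    """Assemble a sentiment-classification prompt with optional worked examples."""
    parts = ["Classify the sentiment of the review as positive or negative."]
    for example_review, label in examples:
        parts.append(f"Review: {example_review}\nSentiment: {label}")
    parts.append(f"Review: {review}\nSentiment:")
    return "\n\n".join(parts)


# Hypothetical worked examples, included in every prompt.
EXAMPLES = [
    ("The battery lasts all day and charging is quick.", "positive"),
    ("It stopped working after a week.", "negative"),
]

if __name__ == "__main__":
    review = "Setup was painless and the screen is sharp."
    zero_shot = count_tokens(build_prompt(review, []))
    few_shot = count_tokens(build_prompt(review, EXAMPLES))
    calls = 1_000  # hypothetical number of reviews to classify
    print(zero_shot, few_shot, (few_shot - zero_shot) * calls)
```

The examples add the same fixed number of tokens to every call, so their cost grows linearly with the number of calls.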

Interactiveness

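LangChain does not only process information; it can also hold a conversation about it. Think of a chat where both parties need to remember what was said earlier to reply aptly. Because the model itself remembers nothing between calls, a conversational chain with memory typically re-sends the earlier messages along with every new one, so each turn costs more tokens than the last. This interactiveness, while powerful, requires even more tokens.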
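Here is a back-of-the-envelope sketch of conversation memory. It assumes every user message is 10 tokens, every reply is 30 tokens, and the full history is re-sent on each turn, as a simple buffer-style memory does. The function name and the fixed message lengths are hypothetical.

```python
def prompt_tokens_per_turn(user_tokens: int, reply_tokens: int, turns: int) -> list[int]:
    """Prompt size of each turn when the full conversation history is re-sent.

    Simplifying assumption: every user message and every model reply has a
    fixed length.
    """
    history = 0
    sizes = []
    for _ in range(turns):
        prompt = history + user_tokens   # whole history plus the new message
        sizes.append(prompt)
        history = prompt + reply_tokens  # the reply joins the history too
    return sizes


if __name__ == "__main__":
    sizes = prompt_tokens_per_turn(user_tokens=10, reply_tokens=30, turns=5)
    print(sizes)       # [10, 50, 90, 130, 170]
    print(sum(sizes))  # 450
```

Over five turns the prompts total 450 tokens, versus 50 if each message were sent on its own. Each prompt grows by one exchange per turn, so the cumulative cost grows roughly with the square of the conversation length. Memory variants that keep only recent turns or a running summary exist to trim exactly this cost.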

Dynamic Scaling

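LangChain doesn't stick to a fixed number of steps. In agent-style workflows, the model decides at runtime which tools to call and when it is done, so a harder task can trigger more model calls, and each call re-sends the growing record of the steps taken so far. The more it scales, the more tokens it consumes. It's like a balloon that expands to hold more air; in this case, the air is tokens.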
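One concrete form of this dynamic scaling is an agent loop. The sketch models prompt sizes only. Each iteration re-sends a fixed base prompt plus a scratchpad that holds all earlier reasoning and tool results, and a cap on iterations bounds the cost. LangChain's classic `AgentExecutor`, for instance, exposes a `max_iterations` setting for this purpose. The function name and all token figures are hypothetical.

```python
def agent_prompt_tokens(base_prompt_tokens: int, step_tokens: list[int],
                        max_iterations: int) -> list[int]:
    """Prompt size of each iteration of a simple agent loop.

    step_tokens[i] is how many tokens iteration i appends to the scratchpad
    (the model's reasoning plus the tool's result). Every later iteration
    re-sends the base prompt and the whole scratchpad. The loop stops when
    the steps run out or max_iterations is reached.
    """
    sizes = []
    scratchpad = 0
    for i, added in enumerate(step_tokens):
        if i >= max_iterations:
            break  # safety cap on the number of model calls
        sizes.append(base_prompt_tokens + scratchpad)
        scratchpad += added
    return sizes


if __name__ == "__main__":
    easy_task = [120]                # hypothetical: finished in one step
    hard_task = [120, 200, 80, 150]  # hypothetical: needed four steps
    print(sum(agent_prompt_tokens(500, easy_task, max_iterations=10)))
    print(sum(agent_prompt_tokens(500, hard_task, max_iterations=10)))
    print(sum(agent_prompt_tokens(500, hard_task, max_iterations=2)))
```

With a 500-token base prompt, the one-step task costs 500 prompt tokens and the four-step task costs 2,840. Capping the same task at two iterations limits it to 1,120, at the price of stopping before it finishes.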

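Built-in Prompt Scaffolding

The token cost does not come from sophisticated algorithms running inside LangChain itself; the framework's work consists mostly of assembling prompts and routing data between calls. Its built-in chains and agents wrap your input in prompt templates that may include system instructions, descriptions of the available tools, and output-format guidance. You pay for this scaffolding on every call, even though you never typed it.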
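To see how much of a prompt can be scaffolding, this sketch wraps a short question in an agent-style template with system instructions, tool descriptions, and format rules. The tools and wording are hypothetical. They are loosely modeled on ReAct-style agent prompts rather than copied from LangChain, and tokens are counted with a crude word-and-punctuation rule.

```python
import re


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: counts words and punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


# Hypothetical tool list and instructions, for illustration only.
TOOLS = {
    "search": "Look up current information on the web. Input: a search query.",
    "calculator": "Evaluate an arithmetic expression. Input: the expression.",
}
SYSTEM = "You are a helpful assistant. Answer the user's question, using tools when needed."
FORMAT = ("Reply with a line 'Action: <tool name>' and a line 'Action Input: <input>', "
          "or with 'Final Answer: <answer>'.")


def build_agent_prompt(question: str) -> str:
    """Wrap the user's question in instructions, tool descriptions and format rules."""
    tool_lines = "\n".join(f"{name}: {desc}" for name, desc in TOOLS.items())
    return f"{SYSTEM}\n\nTools:\n{tool_lines}\n\n{FORMAT}\n\nQuestion: {question}"


if __name__ == "__main__":
    question = "What is 17% of 240?"
    print(count_tokens(question), count_tokens(build_agent_prompt(question)))
```

The overhead is the same whatever the question is, so it is paid in full on every call, including each iteration of an agent loop.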

Iterative Processing

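Sometimes LangChain processes a task iteratively, revisiting earlier output to refine it. The "refine" strategy for summarization, for example, passes the current draft back to the model together with the next piece of the document and asks for an improved version. Each iteration re-sends the draft, so it uses additional tokens on top of the new material. Think of it like editing a draft multiple times to polish it: each pass fine-tunes the content, but it also takes time and resources.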
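Here is a sketch of how a refine-style loop spends tokens. It assumes, for simplicity, that the draft grows by a fixed number of tokens per pass. The function name and all sizes are hypothetical.

```python
def refine_prompt_tokens(chunk_sizes: list[int], instruction_tokens: int,
                         draft_growth: int) -> list[int]:
    """Prompt size of each pass of a refine-style loop.

    Each pass sends the instructions, the current draft and the next chunk,
    and the model returns an improved draft. Simplifying assumption: the
    draft grows by a fixed number of tokens per pass.
    """
    sizes = []
    draft = 0  # the first pass has no draft yet
    for chunk in chunk_sizes:
        sizes.append(instruction_tokens + draft + chunk)
        draft += draft_growth
    return sizes


if __name__ == "__main__":
    passes = refine_prompt_tokens([1_000, 1_000, 1_000], instruction_tokens=60, draft_growth=150)
    print(passes)       # [1060, 1210, 1360]
    print(sum(passes))  # 3630
```

The three 1,000-token chunks cost 3,630 prompt tokens here, because each later pass also re-sends the draft produced so far.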

Comprehensive Tasks

LangChain is built for comprehensive and sometimes convoluted tasks across multiple disciplines, not just basic question-answering. If you're working on complex solutions, like generating an interactive report, the depth and breadth of the information pulled into the prompts will naturally consume more tokens.

Imagine working with a massive database where each query demands detailed interactions and data pulls. In a LangChain application, data retrieved for a query, such as database records or document passages, is usually pasted into the prompt so the model can use it, and every extra record retrieved adds its tokens to that call.
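The database analogy corresponds to retrieval-augmented generation: relevant records are fetched and pasted into the prompt. This sketch uses a toy retriever that ranks documents by shared words. Real systems usually use embeddings or a search index, and the helper names and documents here are hypothetical. It shows how the prompt grows with the number of retrieved documents, `k`.

```python
import re


def words(text: str) -> list[str]:
    """Lower-cased words, used only for the toy relevance score."""
    return re.findall(r"\w+", text.lower())


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: counts words and punctuation marks."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def retrieve(query: str, documents: list[str], k: int) -> list[str]:
    """Toy retriever: rank documents by how many query words they share."""
    query_words = set(words(query))
    ranked = sorted(documents, key=lambda d: len(query_words & set(words(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int) -> str:
    """Paste the top-k documents into the prompt ahead of the question."""
    context = "\n\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical records standing in for a database or document store.
DOCS = [
    "Quarterly revenue grew in the north region.",
    "The office cafeteria menu changed.",
    "Revenue in the south region fell slightly.",
]

if __name__ == "__main__":
    question = "How did revenue change by region?"
    for k in (1, 2, 3):
        print(k, count_tokens(build_rag_prompt(question, DOCS, k)))
```

Every document added to the context is paid for on that call, so tuning `k` and the chunk size is one of the more direct ways to control token usage.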

Conclusion

LangChain costs many tokens because of how it works. It splits tasks into several model calls, re-sends context, conversation history, and intermediate results with each call, wraps inputs in detailed prompt templates, and can loop or retrieve additional data as a task demands. While this might seem token-greedy, these same traits are what let LangChain handle complex, multi-stage tasks and produce detailed, interactive outputs.

Understanding this gives us a deeper appreciation of what happens behind the scenes in LangChain: the tokens it consumes are the price of the sophisticated and capable solutions it offers. As we use such frameworks, the value comes not just from efficiency but from the quality and depth of results that these tokens help achieve.