Why LLMs Struggle with Excel Files: The Challenges

Excel files are an essential tool for countless professionals, often used to store and analyze complex data in a grid format. But when large language models (LLMs) are tasked with processing these files, things can go awry. Instead of delivering accurate insights, LLMs frequently mix data from different rows, jumble information from various columns, or fail to properly understand the structure of the spreadsheet. This article will explore the key challenges that LLMs face when working with Excel files and why these issues persist.

The Intricacies of Excel's Data Structure

Excel files are organized into rows and columns, where each row typically represents a distinct record, and each column holds a specific data attribute. This structure is designed to make it easy for humans to read and analyze data. An LLM, however, never sees the grid itself. The file is first converted into a stream of text, and in that flattened form the row-column relationships that give the data meaning are easy to lose.

Unlike natural language, where the order of words is crucial for understanding, Excel relies heavily on spatial relationships: the position of data in a cell, row, or column. For example, in a simple sales table, the first column might contain customer names, the second column might list purchase amounts, and the third column could indicate dates. The model, however, may not understand that "Alice," "1000," and "January" belong together in a single row and might instead treat them as individual, disconnected elements. This lack of spatial awareness is a core reason why LLMs often mix up data from different rows or misinterpret the relationships between columns.
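
One way to keep "Alice," "1000," and "January" together is to turn each row into a single labeled line before it reaches the model. The following is a minimal sketch, not a definitive implementation. It assumes the sheet has already been read into a header list and a list of rows, and the function name `rows_to_records` and the sample data are hypothetical.

```python
def rows_to_records(header, rows):
    """Turn each spreadsheet row into one labeled line of text."""
    records = []
    for row in rows:
        # Pair every value with its column name so the row travels as one unit.
        pairs = [f"{name}: {value}" for name, value in zip(header, row)]
        records.append("; ".join(pairs))
    return records


# Hypothetical sales table, assumed to have been read from a workbook already.
header = ["Customer", "Amount", "Month"]
rows = [
    ["Alice", 1000, "January"],
    ["Bob", 250, "February"],
]

records = rows_to_records(header, rows)
for line in records:
    print(line)
```

Each printed line carries its own column names, so a value cannot drift away from the row or header it belongs to.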

The Text Processing Nature of LLMs

LLMs, including GPT-4o, are primarily trained on vast amounts of natural language data. This means they excel at understanding patterns in human language but are not naturally equipped to process structured data like that in an Excel sheet. The way LLMs are designed to predict the next word or phrase in a sentence doesn’t translate well to the rigid, grid-like format of a spreadsheet, where meaning depends on a value's position rather than on the words around it.

When an LLM reads an Excel file, it doesn't inherently know that data in a specific row should stay together or that the header of a column indicates the kind of information that follows. The model sees the content of each cell as an isolated unit rather than part of a larger whole. For example, a cell with a date like "2024-01-01" might be treated the same way as a product name or a price figure, simply because the model doesn’t understand that "date" has a unique significance.
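
Since the model may not notice that a cell holds a date rather than a name or a price, one option is to state each column's type explicitly. The sketch below assumes cell values arrive as plain Python objects or strings and that dates written as text use the ISO form `YYYY-MM-DD`. The helpers `describe_cell` and `describe_columns` are hypothetical names.

```python
from datetime import date, datetime


def describe_cell(value):
    """Return a coarse type label for a single cell value."""
    if value is None or value == "":
        return "empty"
    # bool is a subclass of int in Python, so check it first.
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, (date, datetime)):
        return "date"
    if isinstance(value, str):
        try:
            date.fromisoformat(value)  # accepts strings such as "2024-01-01"
            return "date"
        except ValueError:
            return "text"
    return "unknown"


def describe_columns(header, rows):
    """Map each column name to the sorted type labels found in it."""
    summary = {}
    for index, name in enumerate(header):
        labels = {describe_cell(row[index]) for row in rows}
        summary[name] = sorted(labels)
    return summary


# Hypothetical sample data.
header = ["Product", "Price", "Sold on"]
rows = [
    ["Widget", 9.99, "2024-01-01"],
    ["Gadget", 24.5, "2024-01-03"],
]
column_types = describe_columns(header, rows)
print(column_types)
```

A column that reports more than one label, such as both "number" and "text", is also a useful early warning of inconsistent formatting.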

Lack of Contextual Awareness

Context is vital for interpreting any kind of data, but it's even more crucial when dealing with structured information like an Excel sheet. Humans can immediately make sense of data tables because we have the ability to interpret the context from headers, labels, and the way the data is organized. An LLM, however, is only processing what’s in front of it, without any deeper understanding of what the data represents.

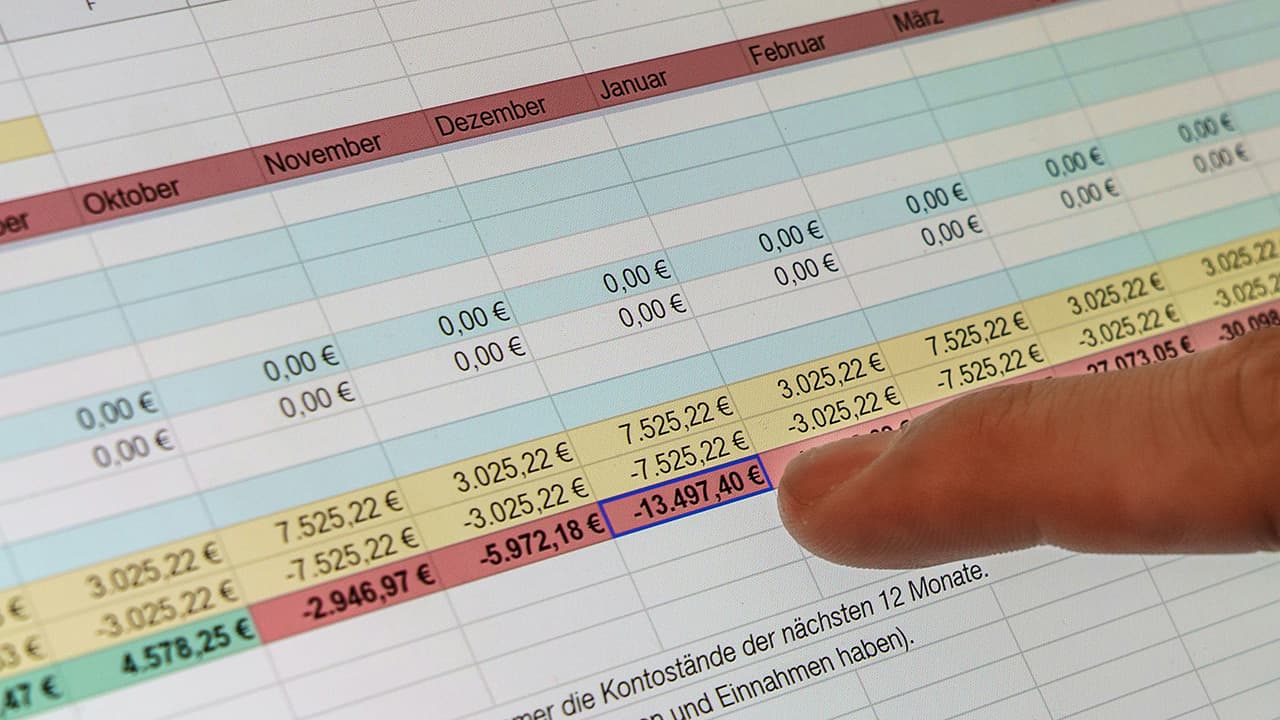

Consider an Excel sheet that tracks monthly expenses, with columns for "Category," "Amount," and "Date." To interpret this data correctly, the model must recognize that the "Amount" corresponds to a specific "Category" and is tied to a particular "Date." Without that contextual awareness, the model might mix values from different categories or incorrectly link amounts with dates, leading to inaccurate conclusions.

Parsing Issues and Inconsistent Data Formatting

Another challenge LLMs face is related to parsing the Excel file into a format the model can process. Excel files come in a variety of formats, with differences in how cells are formatted, how data is presented, and whether or not certain cells are merged. Merged cells or missing values can create problems, as the model may struggle to make sense of incomplete or irregular data.
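
Merged cells are a common source of such gaps. The sketch below assumes that the spreadsheet reader returns the merged value only in the first cell of the range and `None` in the others, which should be verified against whichever library is actually used. The function `fill_down` and the expense data are hypothetical.

```python
def fill_down(rows, column_index):
    """Copy the last seen value into blank cells of one column."""
    filled = []
    last_value = None
    for row in rows:
        new_row = list(row)  # copy so the input rows stay untouched
        if new_row[column_index] is None:
            new_row[column_index] = last_value
        else:
            last_value = new_row[column_index]
        filled.append(new_row)
    return filled


# Hypothetical sheet where "Travel" was merged across three rows.
rows = [
    ["Travel", "Flights", 420],
    [None, "Hotel", 310],
    [None, "Taxi", 45],
    ["Office", "Paper", 18],
]
filled_rows = fill_down(rows, column_index=0)
for row in filled_rows:
    print(row)
```

Filling down cannot tell a merged cell from a value that is genuinely missing, so it is safest to apply it only to columns known to contain merged labels.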

Moreover, Excel files can include complex formulas, calculated fields, or embedded charts. These are designed to perform specific functions or present data in a summarized way, but LLMs typically can't process or interpret these in the same way that a human can. For instance, a model might fail to recognize a formula that calculates a total or mistakenly interpret the result of a formula as raw data, skewing the overall analysis.
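
To reduce the risk of a total being read as raw data, formula cells can be marked before the sheet is turned into text. This is a rough sketch that assumes formulas were read as strings beginning with `=`. Whether a reader returns the formula text or its cached result depends on the library and its settings. The names `label_cell` and `label_rows` are hypothetical.

```python
def label_cell(value):
    """Render a cell as text, marking formulas so they are not read as raw data."""
    if isinstance(value, str) and value.startswith("="):
        return f"[formula {value}]"
    return str(value)


def label_rows(rows):
    """Apply label_cell to every cell in every row."""
    return [[label_cell(value) for value in row] for row in rows]


# Hypothetical expense sheet whose last row is a calculated total.
rows = [
    ["Rent", 1200],
    ["Food", 450],
    ["Total", "=SUM(B1:B2)"],
]
labeled = label_rows(rows)
for row in labeled:
    print(" | ".join(row))
```

With the marker in place, the "Total" row can be excluded from sums or described to the model as derived rather than recorded.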

The variety of ways people use Excel also adds a layer of complexity. Columns might be formatted differently in various files, or data might be organized in non-standard ways. Some cells could include text, while others contain numbers or dates, and some data might be stored in custom formats. This inconsistency only makes it harder for LLMs to generalize across different Excel files.

Large Volume of Data and Ambiguity

Excel files can often contain large volumes of data, sometimes extending to thousands of rows and columns. LLMs struggle with this scale because, unlike a human, they don’t naturally "zoom out" to see the bigger picture. When faced with a huge amount of information, the model might focus too much on small details while missing key trends or patterns in the data. For example, if a spreadsheet contains rows of sales transactions over several years, the model might incorrectly interpret individual rows or assume that the data is random, even though there might be obvious patterns that a human would catch.
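
For a sales sheet spanning several years, one option is to compute the "zoomed out" view in code and hand the model the summary instead of every row. The sketch below assumes transactions are available as `(date, amount)` pairs with dates in `YYYY-MM-DD` form. The function `totals_by_year` and the figures are hypothetical.

```python
from collections import defaultdict


def totals_by_year(transactions):
    """Sum amounts per calendar year from (iso_date, amount) pairs."""
    totals = defaultdict(float)
    for iso_date, amount in transactions:
        year = int(iso_date[:4])  # assumes dates are "YYYY-MM-DD" strings
        totals[year] += amount
    return dict(sorted(totals.items()))


# Hypothetical transactions, one tuple per spreadsheet row.
transactions = [
    ("2022-03-14", 120.0),
    ("2022-11-02", 80.0),
    ("2023-01-20", 200.0),
    ("2023-06-05", 150.0),
    ("2024-02-11", 400.0),
]
yearly = totals_by_year(transactions)
for year, total in yearly.items():
    print(year, total)
```

A handful of yearly totals fits comfortably in a prompt and makes the trend explicit, where thousands of raw rows would leave the model to infer it.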

Additionally, ambiguity in the data can cause problems. For instance, a column labeled "Status" could have entries like "Open," "Closed," or "Pending." Without further clarification, the model may misinterpret these entries or assign them the wrong meanings, leading to inaccurate answers.
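
One way to supply that clarification is to list the distinct values of an ambiguous column alongside whatever definitions are available. The sketch below is illustrative only. The glossary entries are invented placeholders, and `describe_codes` is a hypothetical helper.

```python
from collections import Counter


def describe_codes(values, glossary):
    """List each distinct value with its count and documented meaning."""
    counts = Counter(values)
    lines = []
    for code, count in sorted(counts.items()):
        meaning = glossary.get(code, "meaning not documented")
        lines.append(f"{code} (count={count}): {meaning}")
    return lines


# Hypothetical "Status" column and a hand-written glossary for it.
statuses = ["Open", "Closed", "Pending", "Open", "On hold"]
glossary = {
    "Open": "ticket is being worked on",
    "Closed": "ticket is resolved",
    "Pending": "waiting on the customer",
}
lines = describe_codes(statuses, glossary)
for line in lines:
    print(line)
```

Values missing from the glossary are flagged rather than guessed at, which points to where a human needs to supply the definition.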

The Role of Data Integrity and Noise

One more significant challenge is the integrity of the data itself. Excel sheets, like any data source, are susceptible to errors, inconsistencies, or "noise" — irrelevant or corrupt data that can mislead a model. Missing data, duplicate entries, or outliers that don’t follow the expected patterns can confuse the LLM, leading to erroneous outputs.

For example, if a dataset has missing or erroneous values in critical columns, the model may not be able to make sense of the relationships between the data points, leading to incorrect or incomplete analysis. When faced with noise, the model might attempt to extrapolate patterns that don’t actually exist, compounding the errors.
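
Problems like these are usually cheaper to detect in code before the data reaches the model. The following is a minimal sketch that counts blank cells per column and exact duplicate rows. It assumes rows are lists of simple, hashable values, and `quality_report` is a hypothetical name.

```python
def quality_report(header, rows):
    """Count blank cells per column and exact duplicate rows."""
    missing = {name: 0 for name in header}
    seen = set()
    duplicates = 0
    for row in rows:
        for name, value in zip(header, row):
            if value is None or value == "":
                missing[name] += 1
        key = tuple(row)  # rows must contain hashable values
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"missing": missing, "duplicate_rows": duplicates}


# Hypothetical sheet with one blank amount, one blank date, and one repeat.
header = ["Customer", "Amount", "Date"]
rows = [
    ["Alice", 1000, "2024-01-05"],
    ["Bob", None, "2024-01-06"],
    ["Alice", 1000, "2024-01-05"],
    ["Cara", 300, ""],
]
report = quality_report(header, rows)
print(report)
```

The report can be used to clean the sheet first or included in the prompt, so the model knows which columns are incomplete instead of inferring patterns from the gaps.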

Conclusion

LLMs face significant challenges when processing Excel files because of the differences between free-form text and structured data. The grid structure that gets lost when a sheet is flattened, the model's limited contextual awareness, parsing issues, large data volumes, and noisy data all contribute to inaccuracies. While some of these challenges can be mitigated, the limitations remain substantial, and using LLMs effectively with Excel data often requires careful preprocessing and sometimes human oversight.

Even as these models continue to improve, users should remain cautious when relying on them for complex or detailed Excel data analysis.