How Does Standard Scaling Impact Machine Learning Models?

Are you wondering how standard scaling affects your machine learning models? Let's dive into this frequently asked question and explore how standard scaling can influence the performance of your models.

To begin with, standard scaling (also called standardization) is a common preprocessing technique in machine learning. It transforms each feature independently by subtracting its mean and dividing by its standard deviation, so that every feature ends up with a mean of 0 and a standard deviation of 1. This removes discrepancies between feature scales, so that no feature dominates a model simply because it is measured in larger units.
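The transformation can be written out by hand to see what it does. The following is a minimal sketch, assuming a small made-up matrix `X` (the values are illustrative only), that standardizes each column with NumPy and compares the result with scikit-learn's `StandardScaler`:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: two features on very different scales
X = np.array([
    [1.0, 1000.0],
    [2.0, 3000.0],
    [3.0, 5000.0],
    [4.0, 7000.0],
])

# Manual standardization: subtract the column mean, divide by the column
# standard deviation (population std, ddof=0, which StandardScaler also uses)
X_manual = (X - X.mean(axis=0)) / X.std(axis=0)

# The same transformation via scikit-learn
X_sklearn = StandardScaler().fit_transform(X)

print("Column means after scaling:", X_manual.mean(axis=0))
print("Column stds after scaling: ", X_manual.std(axis=0))
print("Matches StandardScaler:    ", np.allclose(X_manual, X_sklearn))
```

After the transformation both columns are expressed in units of their own standard deviation, regardless of the units they started in.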

When it comes to the impact of standard scaling on machine learning models, the main advantage shows up in algorithms that are sensitive to the magnitude of features. Models that are trained iteratively, such as support vector machines (SVM) and other gradient-based learners, often converge faster on standardized data. Methods built on distances or variances, such as k-nearest neighbors (KNN) and principal component analysis (PCA), do not converge in that sense, but their results change with feature scale: without scaling, the feature with the largest numeric range dominates the distances or the principal components.

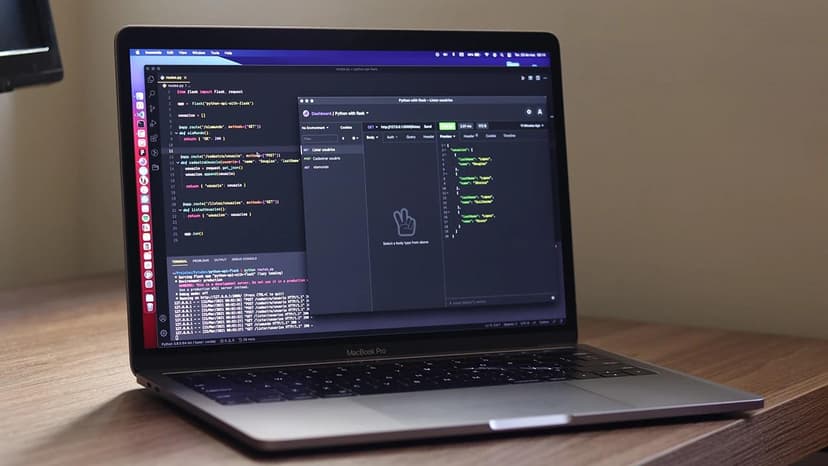

Let's illustrate this with a simple example using the popular Python library, scikit-learn. Consider a dataset with two features, "feature1" and "feature2", each with a different scale. We will first train a support vector machine model on the unscaled data and observe its performance:

Python
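A minimal sketch of such an experiment, assuming a synthetic dataset rather than any particular real one: `feature1` is small-scale and carries the class signal, while `feature2` is large-scale noise. The data-generating choices, the seed, and the variable names are all illustrative assumptions.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Assumption: synthetic data standing in for the dataset described in the text
rng = np.random.default_rng(0)
n_samples = 400
y = rng.integers(0, 2, size=n_samples)

# feature1: small scale, separates the two classes
feature1 = 2.0 * y + rng.normal(0.0, 0.5, size=n_samples)
# feature2: large scale, unrelated to the class label
feature2 = rng.normal(0.0, 1000.0, size=n_samples)
X = np.column_stack([feature1, feature2])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# RBF-kernel SVM trained directly on the unscaled features
svm_unscaled = SVC(kernel="rbf")
svm_unscaled.fit(X_train, y_train)
acc_unscaled = svm_unscaled.score(X_test, y_test)
print(f"Accuracy without scaling: {acc_unscaled:.3f}")
```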

After running the code snippet above, you may notice that the model's accuracy is lower than it could be, because the feature with the larger scale dominates the SVM's distance computations. Let's now apply standard scaling to our data and observe the impact on the model's performance:

Python
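A minimal sketch of the scaled variant, again assuming synthetic data in which `feature1` is small-scale and informative and `feature2` is large-scale noise (both are illustrative assumptions). The scaler and the SVM are wrapped in a scikit-learn pipeline so that the scaler is fitted on the training split only.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Assumption: synthetic data standing in for the dataset described in the text
rng = np.random.default_rng(0)
n_samples = 400
y = rng.integers(0, 2, size=n_samples)
feature1 = 2.0 * y + rng.normal(0.0, 0.5, size=n_samples)   # small scale, informative
feature2 = rng.normal(0.0, 1000.0, size=n_samples)          # large scale, noise
X = np.column_stack([feature1, feature2])

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# StandardScaler learns the mean and std from X_train only; the same
# statistics are then reused to transform X_test inside score()
svm_scaled = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
svm_scaled.fit(X_train, y_train)
acc_scaled = svm_scaled.score(X_test, y_test)
print(f"Accuracy with standard scaling: {acc_scaled:.3f}")
```

Using a pipeline is a design choice rather than a requirement: it keeps the preprocessing and the model together, which makes it harder to accidentally fit the scaler on test data.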

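Upon implementing standard scaling, you should typically observe an improvement in the model's accuracy, since both features now contribute on a comparable scale. Fit the scaler on the training data only and reuse it to transform the test data, so that no information from the test set leaks into preprocessing.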

Another crucial aspect to consider is the impact of outliers on machine learning models. Outliers, which are data points significantly different from the majority of the data, can have a detrimental effect on model performance. Standard scaling does not remove this effect: the mean and standard deviation it relies on are themselves pulled by extreme values, so a single large outlier can compress the remaining data into a narrow range. When outliers are a concern, robust scaling, which uses the median and interquartile range, is usually the safer choice.
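How a scaler reacts to an extreme value can be checked directly. The following is a small sketch, assuming a made-up single-feature column with one outlier, that compares scikit-learn's `StandardScaler` with `RobustScaler`:

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Hypothetical column: four ordinary values and one extreme outlier
x = np.array([[1.0], [2.0], [3.0], [4.0], [100.0]])

# StandardScaler uses the mean and standard deviation of the column
z_standard = StandardScaler().fit_transform(x).ravel()
# RobustScaler uses the median and the interquartile range instead
z_robust = RobustScaler().fit_transform(x).ravel()

# How far apart the four ordinary values are after each transformation
inlier_spread_standard = z_standard[:4].max() - z_standard[:4].min()
inlier_spread_robust = z_robust[:4].max() - z_robust[:4].min()

print("StandardScaler:", np.round(z_standard, 3))
print("RobustScaler:  ", np.round(z_robust, 3))
print("Inlier spread (standard):", round(inlier_spread_standard, 3))
print("Inlier spread (robust):  ", round(inlier_spread_robust, 3))
```

In this toy case the outlier inflates the mean and standard deviation, so the four ordinary values land very close together after standard scaling, while the median and interquartile range used by `RobustScaler` are unaffected by the single extreme value.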

Moreover, standard scaling is particularly beneficial for distance-based algorithms like KNN, where the distance between data points determines the model's predictions. Once the features are standardized, each one contributes comparably to the distance, instead of the feature with the largest units deciding which points count as neighbors.
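The effect on neighbor selection can be seen with a few points. The sketch below assumes a made-up five-row dataset and a hypothetical helper, `nearest_to_first`, and checks which row is the nearest Euclidean neighbor of the first row before and after standardization:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical data: column 0 is small-scale, column 1 is large-scale
X = np.array([
    [1.0, 5000.0],  # row 0: the query point
    [1.1, 5500.0],  # row 1: almost identical in column 0
    [9.0, 5010.0],  # row 2: almost identical in column 1
    [5.0, 1000.0],  # row 3
    [5.0, 9000.0],  # row 4
])

def nearest_to_first(points):
    """Return the index of the row closest (Euclidean) to row 0, excluding row 0."""
    distances = np.linalg.norm(points[1:] - points[0], axis=1)
    return int(np.argmin(distances)) + 1

nearest_raw = nearest_to_first(X)
nearest_scaled = nearest_to_first(StandardScaler().fit_transform(X))

print("Nearest neighbor of row 0, raw features:   row", nearest_raw)
print("Nearest neighbor of row 0, scaled features: row", nearest_scaled)
```

On the raw features the large-scale column decides the outcome, so row 2 is nearest; after standardization both columns count, and row 1 becomes nearest. Neither answer is correct in itself; scaling only ensures the choice is not driven by the units of measurement.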

In addition to improving model performance, standard scaling can also aid in visualizing and interpreting the data. When features are on the same scale, it becomes easier to compare them and analyze their relationships, leading to better insights and decision-making in the machine learning process.

To deepen your understanding of standard scaling and its impact on machine learning models, it helps to compare it with other preprocessing techniques. Min-max scaling maps each feature to a fixed range such as [0, 1], robust scaling centers each feature on its median and divides by its interquartile range, and normalization rescales each sample to unit norm. Each can be more suitable than standard scaling for specific scenarios or algorithms.
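These alternatives are available in scikit-learn's `preprocessing` module. A brief sketch, assuming a made-up four-row matrix, that applies `MinMaxScaler`, `RobustScaler`, and `Normalizer` to the same data so their outputs can be compared side by side:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, Normalizer, RobustScaler

# Hypothetical toy data: two features on very different scales
X = np.array([
    [1.0, 1000.0],
    [2.0, 3000.0],
    [3.0, 5000.0],
    [4.0, 7000.0],
])

# Min-max scaling: each column is mapped to the range [0, 1]
X_minmax = MinMaxScaler().fit_transform(X)
# Robust scaling: each column is centered on its median and divided by its IQR
X_robust = RobustScaler().fit_transform(X)
# Normalization: each ROW is rescaled to unit L2 norm (works per sample, not per feature)
X_unit = Normalizer(norm="l2").fit_transform(X)

print("Min-max scaled:\n", X_minmax)
print("Robust scaled:\n", X_robust)
print("Row-normalized:\n", np.round(X_unit, 4))
```

Note that `Normalizer` operates on rows rather than columns, so it does not put features on a common scale the way the other scalers do.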

Standard scaling plays a vital role in putting features on a common scale, improving the performance of scale-sensitive models, and aiding data visualization. Incorporating it into your machine learning workflows can improve the accuracy and training efficiency of those models, provided outliers are handled separately.

Now that we have explored the impact of standard scaling on machine learning models, why not experiment with different datasets and algorithms to see firsthand the benefits that standard scaling can offer? Upgrade your machine learning skills and elevate your model performance with this fundamental preprocessing technique.